VIRCOM BLOG

Grow Faster with Enhanced SMB Email Protection

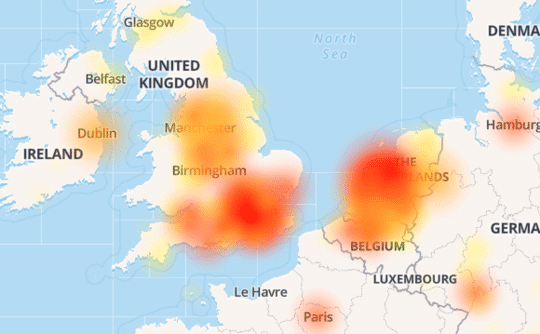

Office 365 Email Outages: What’s Your Backup Plan?

Imagine coming into your office, firing up the computer…and not getting any email. For some, this might seem like a dream scenario but only if

The Rise of URL Defense

URL Defense Is Now Essential for Combatting Evolving Email Threats

As cybersecurity threats like targeted email phishing attacks surge, one truth remains clear: email is

Microsoft Defender for Microsoft 365 (Formerly ATP): Upside and Pitfalls

Microsoft Defender for Microsoft 365 – the evolution of Microsoft 365 Advanced Threat Protection (ATP) – is frequently cited as a robust solution aimed at

How Many Email Addresses Can You Send to at Once in Outlook or Using Microsoft 365 Systems?

Have you ever wondered how many email addresses Microsoft Outlook and Microsoft 365 can handle when sending a single message? While Outlook is a powerful

How to Set Up a Basic VPN Connection with Windows Server (2016, 2022)

For many small businesses and IT teams, cybersecurity starts with securing how users connect to internal systems. One of the simplest ways to improve remote

How to Send a Message from Telnet

Believe it or not, Telnet, while old-school, is still a fast, reliable tool for fixing email issues. Newer tools may be easier to use, but
